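Hello.

To use Azure Storage Blobs from Windows, you have several choices: each programming language has its own API, the OS side has a PowerShell module, and for the Command Prompt (it also works in PowerShell) there is the az command.

So if you want to use Blobs from a traditional command batch script of the kind that goes back to MS-DOS, you end up using the az command, but because it does so much, its syntax is verbose.

I often just want to put files into Blob storage and take them out again, and I kept thinking "I wish there were something simpler." Apparently plenty of other users around the world felt the same way.

Microsoft distributes a tool called azcopy.exe.

It can be downloaded from the following page.

Get started with AzCopy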

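I am going to try this on Windows Server 2022, so I downloaded from the link labeled "Windows 64-bit".

When the download finished, I had a file named azcopy_windows_amd64_10.16.1.zip.

As of November 1, 2022, the version was 10.16.1.

(It is updated fairly often.)

Extracting the downloaded archive gives you azcopy.exe, which is best placed somewhere on your PATH.

For now I put it under the D:\work folder.

I would love to start using AzCopy right away, but with the yen as weak as it is, paying Azure charges just to study is painful, so let's use Azurite, the Azure Storage emulator.

I wrote up how to install Azurite in the article below.

That article installs it on Ubuntu Server 22.04, but since it runs on Node.js it will probably work on Windows Server too.

"Using Azurite, the Azure Storage emulator"

The Azurite environment used here is Ubuntu Server 22.04.1, Node.js 18.12.0, and Azurite 3.20.1, all the latest versions at the time of writing, but somewhat older ones should behave the same.

From here on I assume Azurite is installed on a machine named UbuntuServer2204 and is already running.

Let's get straight to moving files in and out of Azurite with AzCopy!

Or so I thought, but recent versions of AzCopy appear to require either Azure AD sign-in or a SAS (Shared Access Signature).

This machine is obviously not joined to Azure AD, so a SAS is the only option. However, the Azurite site documents connection strings but not a SAS.

On real Azure there was a step for creating a SAS, so perhaps one has to be generated against the running environment for Azurite as well.

I wondered how to do that with Azurite, and it turns out that Storage Explorer, a tool made by Microsoft, can create one, so let's install it.

On the following site, clicking the button-like link labeled [Download now] starts the download.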

Azure Storage Explorer

When the download finished, I had a file named StorageExplorer.exe.

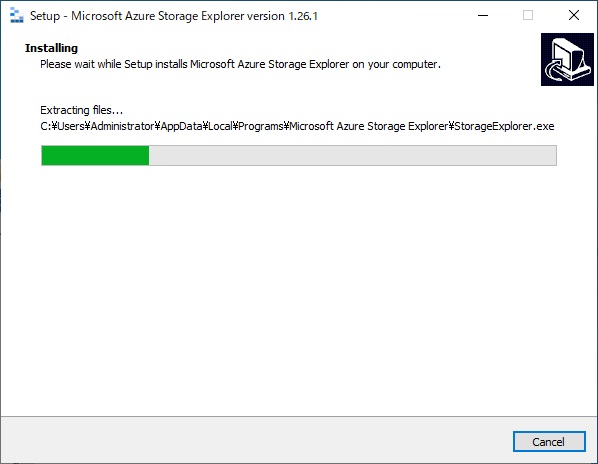

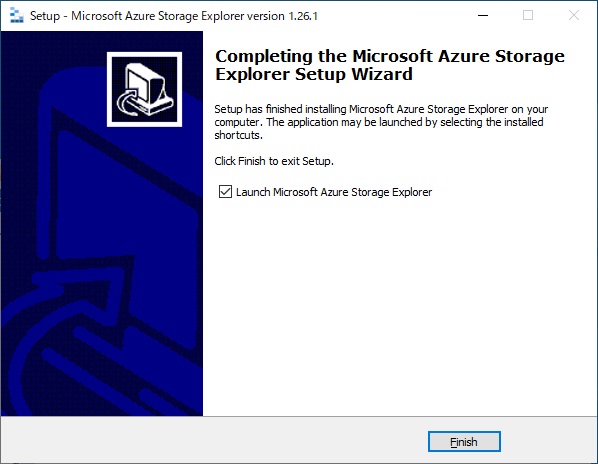

Now let's install Storage Explorer.

Double-click StorageExplorer.exe to run it.

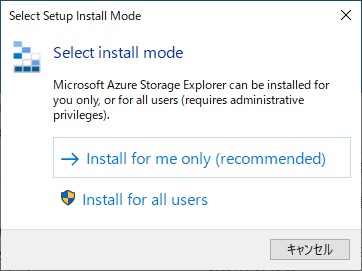

The installer asks whether to install for everyone on the machine or only for the installing user.

Installing for everyone appears to be discouraged, so click [Install for me only].

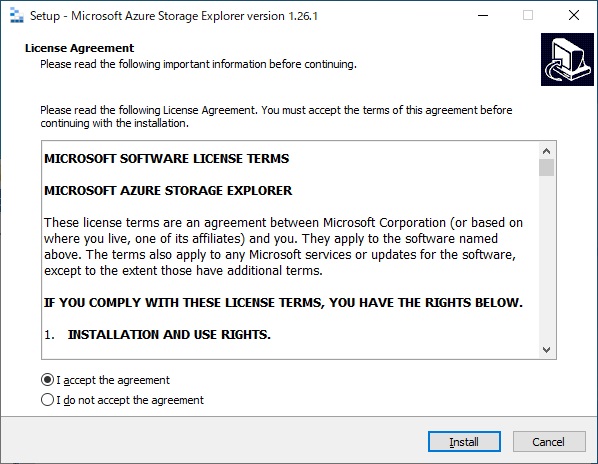

Select [I accept the agreement] and click Install.

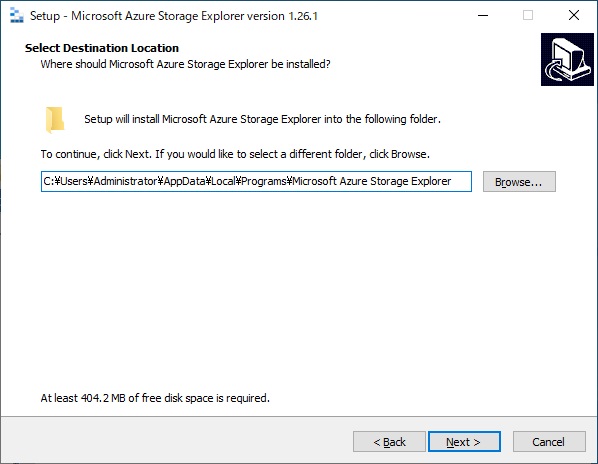

The default install location is fine.

Click Next.

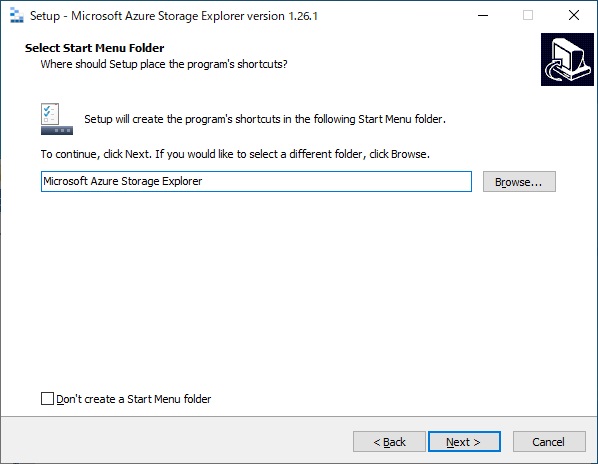

The default Start Menu folder is fine.

Click Next.

Wait for the installation to finish.

Click Finish.

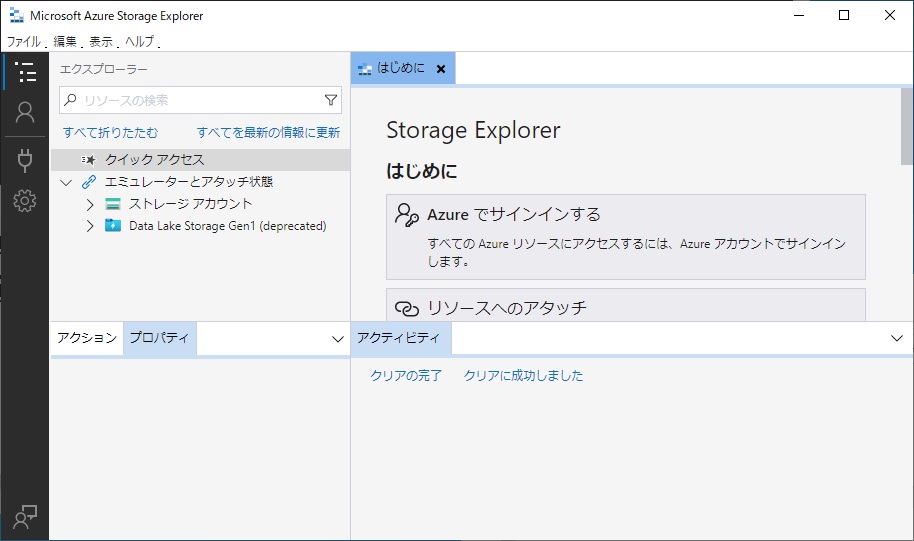

Storage Explorer starts automatically.

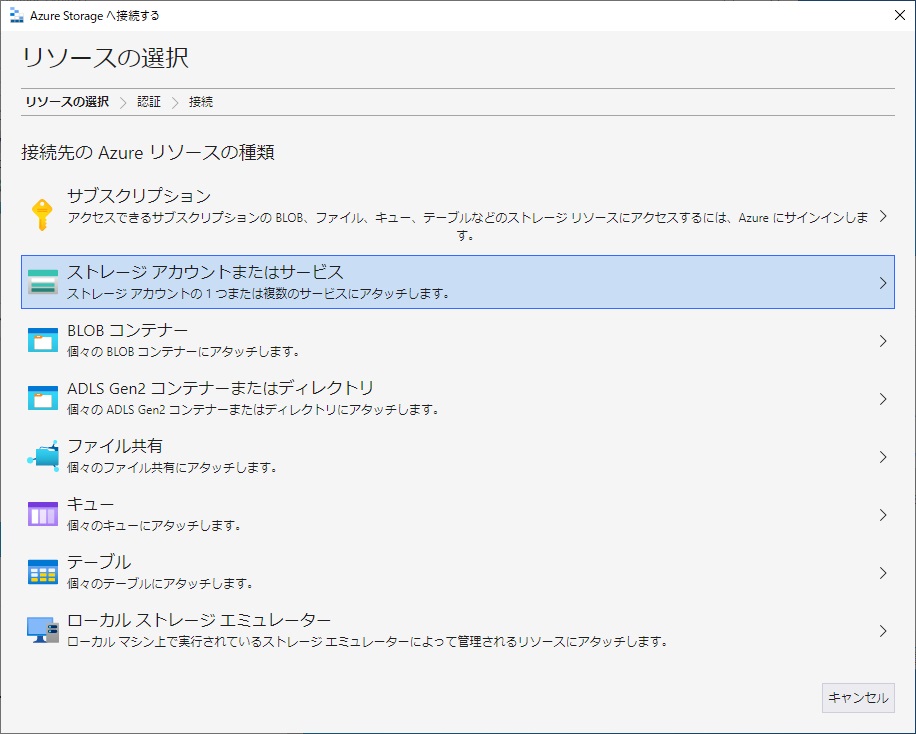

Click the power-plug icon on the left side of the window.

Click [Storage account or service].

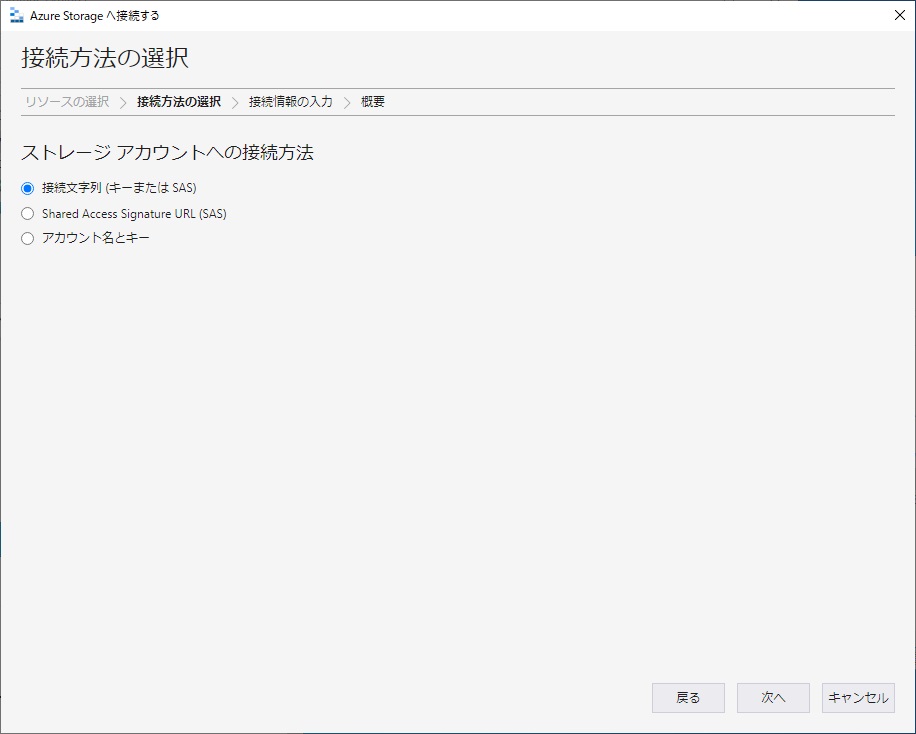

Select [Connection string (Key or SAS)] and click Next.

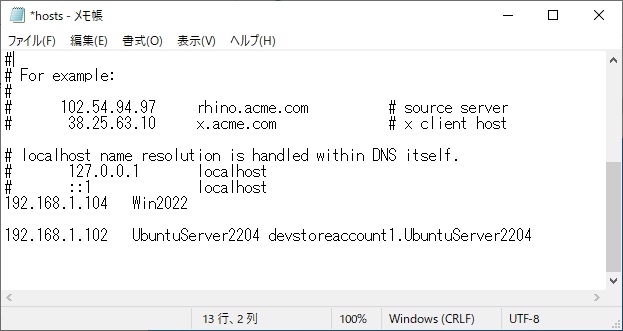

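The next step requires the machine name of the server where Azurite is installed, and this is where a small trick comes in.

An Azure Storage URL has the form https://[storage account name].[Azure domain], that is, the storage account name is used as the host name.

To follow the same pattern in this environment, I added the following host name to the C:\windows\system32\drivers\etc\hosts file, on the line for the Azurite server's IP address:

devstoreaccount1.UbuntuServer2204

Now, back to the procedure.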

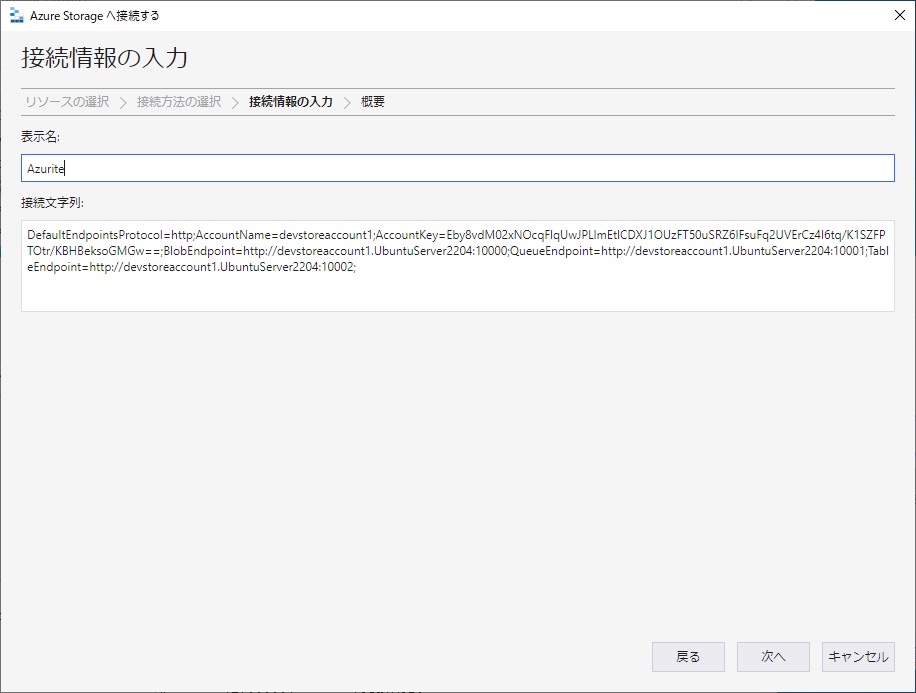

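[Display name:] can be anything you like.

For [Connection string:], take the connection string that the Azurite documentation lists under [The full HTTPS connection string is:] and enter it after adapting it to this environment. Here the protocol is http and the endpoints point at devstoreaccount1.UbuntuServer2204, as follows.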

DefaultEndpointsProtocol=http;AccountName=devstoreaccount1;AccountKey=Eby8vdM02xNOcqFlqUwJPLlmEtlCDXJ1OUzFT50uSRZ6IFsuFq2UVErCz4I6tq/K1SZFPTOtr/KBHBeksoGMGw==;BlobEndpoint=http://devstoreaccount1.UbuntuServer2204:10000;QueueEndpoint=http://devstoreaccount1.UbuntuServer2204:10001;TableEndpoint=http://devstoreaccount1.UbuntuServer2204:10002;
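A connection string like the one above is just a list of Key=Value pairs separated by semicolons. The following is a minimal Python sketch, not part of the original procedure, that splits it into its parts so the Blob endpoint can be checked at a glance. The function name `parse_connection_string` is made up for this illustration.

```python
def parse_connection_string(conn_str: str) -> dict[str, str]:
    """Split a 'Key=Value;Key=Value;' connection string into a dict."""
    settings: dict[str, str] = {}
    for part in conn_str.split(";"):
        if not part:
            continue  # skip the empty piece left by the trailing ';'
        # Split on the first '=' only: the account key itself ends in '=='.
        key, _, value = part.partition("=")
        settings[key] = value
    return settings


# The connection string used in this article (Azurite's default account).
CONN_STR = (
    "DefaultEndpointsProtocol=http;"
    "AccountName=devstoreaccount1;"
    "AccountKey=Eby8vdM02xNOcqFlqUwJPLlmEtlCDXJ1OUzFT50uSRZ6IFsuFq2UVErCz4I6tq/K1SZFPTOtr/KBHBeksoGMGw==;"
    "BlobEndpoint=http://devstoreaccount1.UbuntuServer2204:10000;"
    "QueueEndpoint=http://devstoreaccount1.UbuntuServer2204:10001;"
    "TableEndpoint=http://devstoreaccount1.UbuntuServer2204:10002;"
)

settings = parse_connection_string(CONN_STR)
print(settings["AccountName"])
print(settings["BlobEndpoint"])
```

The Blob endpoint printed here is the base of the URLs passed to azcopy later in this article.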

Click Next.

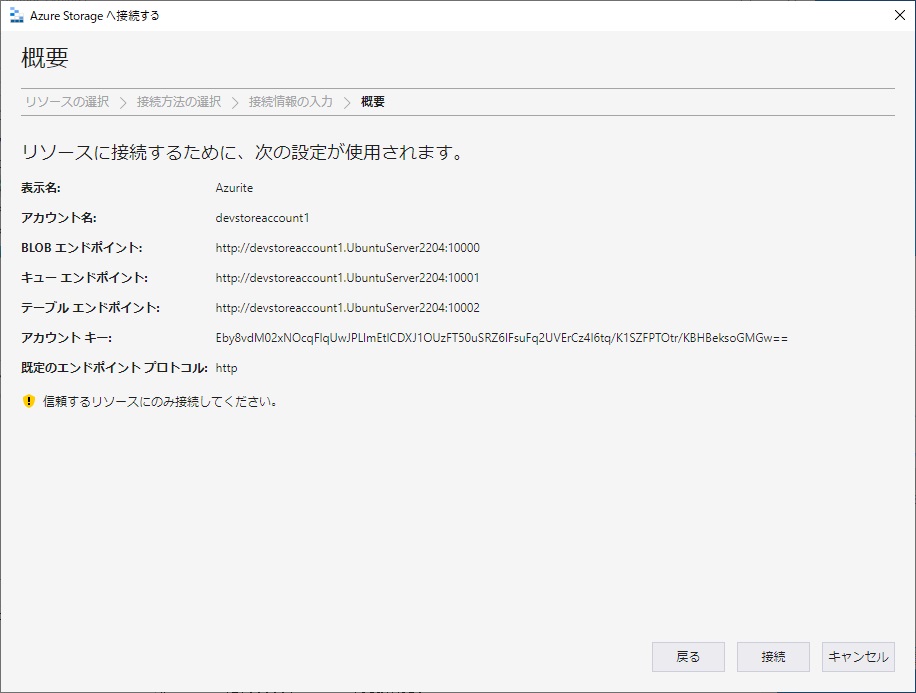

Click Connect.

If all goes well, a new node named [Azurite(key)] appears, with three items beneath it: Blob Containers, Queues, and Tables.

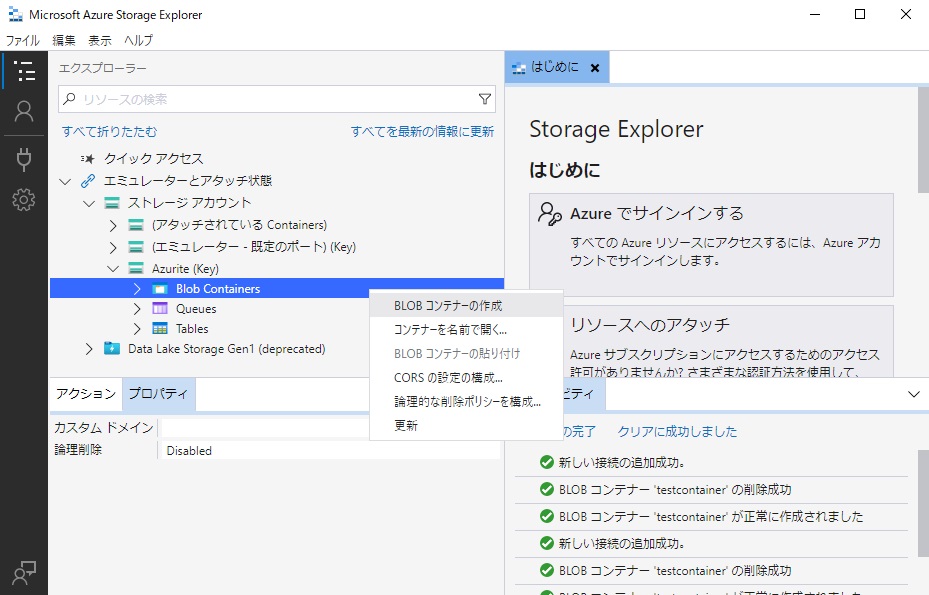

Right-click [Blob Containers] to open the context menu and select [Create Blob Container].

You can then enter a name for the new container; I used "testcontainer".

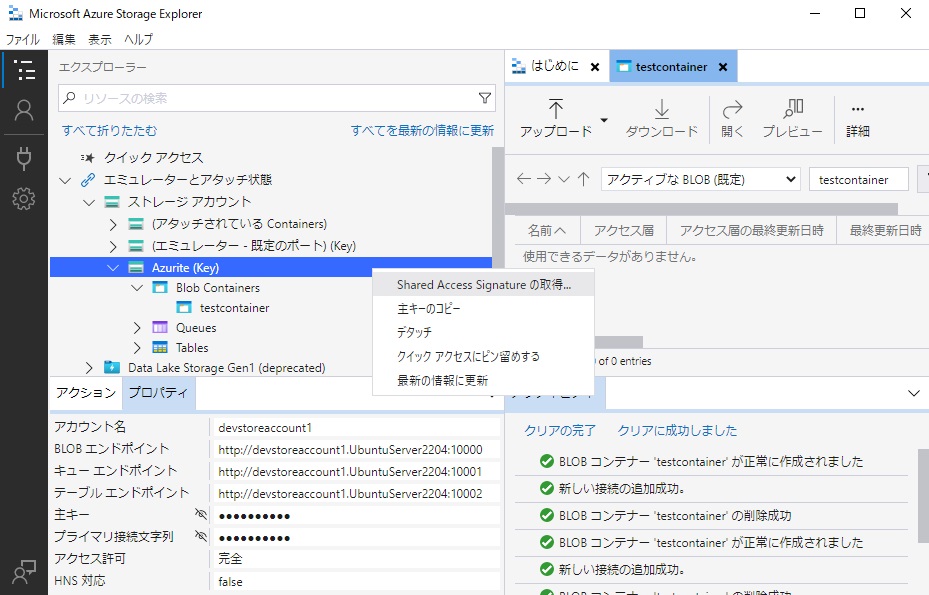

Next, right-click [Azurite(key)] and select [Get Shared Access Signature] from the menu.

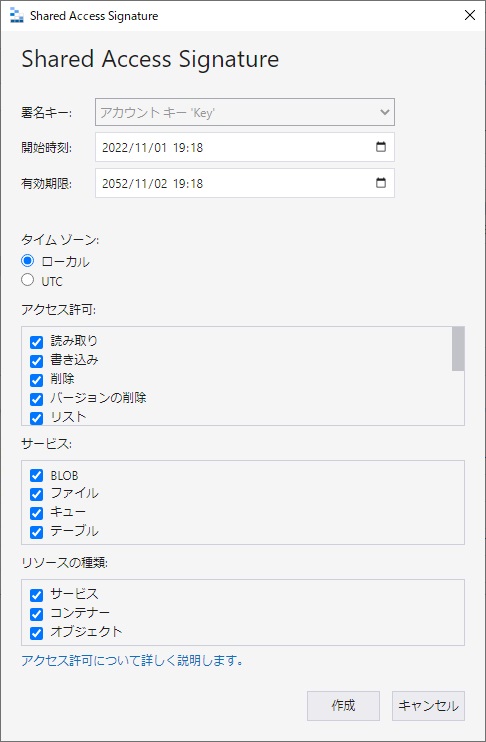

The SAS creation dialog opens.

I set [Expiry time:] to 30 years from now.

Check every box under [Permissions:].

Click Create.

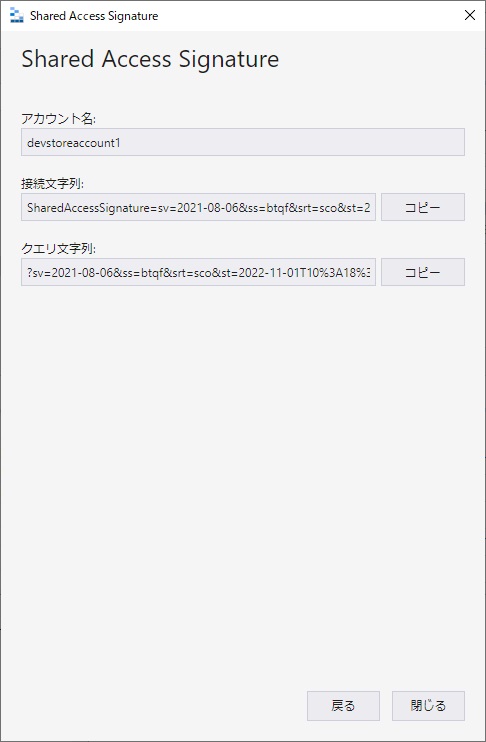

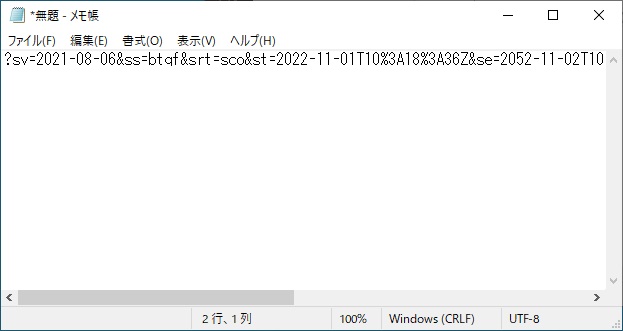

What we need is the [Query string:] value at the bottom, so I copied it into Notepad.

Click Close.

The SAS looks like this.

?sv=2021-08-06&ss=btqf&srt=sco&st=2022-11-01T10%3A18%3A36Z&se=2052-11-02T10%3A18%3A00Z&sp=rwdxftlacup&sig=xoNeblE7kxo%2FXxo5wHvyYrUGNBzpGjkD4DJhiVc64g0%3D
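The SAS is an ordinary URL query string, so it can be taken apart to confirm what was just configured in the dialog. The sketch below uses only Python's standard library; the helper name `parse_sas` is hypothetical. As far as I understand the account SAS format, `sv` is the service version, `ss` the services, `srt` the resource types, `st` and `se` the start and expiry times, `sp` the permissions, and `sig` the signature.

```python
from urllib.parse import parse_qs

# The SAS query string copied from Storage Explorer in this article.
SAS = (
    "?sv=2021-08-06&ss=btqf&srt=sco"
    "&st=2022-11-01T10%3A18%3A36Z&se=2052-11-02T10%3A18%3A00Z"
    "&sp=rwdxftlacup&sig=xoNeblE7kxo%2FXxo5wHvyYrUGNBzpGjkD4DJhiVc64g0%3D"
)


def parse_sas(sas: str) -> dict[str, str]:
    """Decode a SAS query string into a field -> value dict."""
    fields = parse_qs(sas.lstrip("?"))  # parse_qs also undoes the %XX encoding
    return {name: values[0] for name, values in fields.items()}


sas_fields = parse_sas(SAS)
print("expires:", sas_fields["se"])
print("permissions:", sas_fields["sp"])
```

The decoded expiry time is the 30-years-out value chosen in the dialog, which is a quick way to spot a SAS that has expired or was created with the wrong permissions.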

AzCopy is finally ready to use.

First, run azcopy.exe (the azcopy command) with no arguments.

D:\work>azcopy

AzCopy 10.16.1

Project URL: github.com/Azure/azure-storage-azcopy

AzCopy is a command line tool that moves data into and out of Azure Storage.

To report issues or to learn more about the tool, go to github.com/Azure/azure-storage-azcopy

The general format of the commands is: 'azcopy [command] [arguments] --[flag-name]=[flag-value]'.

Usage:

azcopy [command]

Available Commands:

bench Performs a performance benchmark

completion Generate the autocompletion script for the specified shell

copy Copies source data to a destination location

doc Generates documentation for the tool in Markdown format

env Shows the environment variables that you can use to configure the behavior of AzCopy.

help Help about any command

jobs Sub-commands related to managing jobs

list List the entities in a given resource

login Log in to Azure Active Directory (AD) to access Azure Storage resources.

logout Log out to terminate access to Azure Storage resources.

make Create a container or file share.

remove Delete blobs or files from an Azure storage account

set-properties (Preview) Given a location, change all the valid system properties of that storage (blob or file)

sync Replicate source to the destination location

Flags:

--cap-mbps float Caps the transfer rate, in megabits per second. Moment-by-moment throughput might vary slightly from the cap. If this option is set to zero, or it is omitted, the throughput isn't capped.

-h, --help help for azcopy

--log-level string Define the log verbosity for the log file, available levels: INFO(all requests/responses), WARNING(slow responses), ERROR(only failed requests), and NONE(no output logs). (default 'INFO'). (default "INFO")

--output-level string Define the output verbosity. Available levels: essential, quiet. (default "default")

--output-type string Format of the command's output. The choices include: text, json. The default value is 'text'. (default "text")

--trusted-microsoft-suffixes string Specifies additional domain suffixes where Azure Active Directory login tokens may be sent. The default is '*.core.windows.net;*.core.chinacloudapi.cn;*.core.cloudapi.de;*.core.usgovcloudapi.net;*.storage.azure.net'. Any listed here are added to the default. For security, you should only put Microsoft Azure domains here. Separate multiple entries with semi-colons.

-v, --version version for azcopy

Use "azcopy [command] --help" for more information about a command.

Next, display the help for the azcopy subcommand copy. Running it with no arguments prints an error followed by the usage text.

D:\work>azcopy copy

Error: wrong number of arguments, please refer to the help page on usage of this command

Usage:

azcopy copy [source] [destination] [flags]

Aliases:

copy, cp, c

Examples:

Upload a single file by using OAuth authentication. If you have not yet logged into AzCopy, please run the azcopy login command before you run the following command.

- azcopy cp "/path/to/file.txt" "https://[account].blob.core.windows.net/[container]/[path/to/blob]"

Same as above, but this time also compute MD5 hash of the file content and save it as the blob's Content-MD5 property:

- azcopy cp "/path/to/file.txt" "https://[account].blob.core.windows.net/[container]/[path/to/blob]" --put-md5

Upload a single file by using a SAS token:

- azcopy cp "/path/to/file.txt" "https://[account].blob.core.windows.net/[container]/[path/to/blob]?[SAS]"

Upload a single file by using a SAS token and piping (block blobs only):

- cat "/path/to/file.txt" | azcopy cp "https://[account].blob.core.windows.net/[container]/[path/to/blob]?[SAS]" --from-to PipeBlob

Upload a single file by using OAuth and piping (block blobs only):

- cat "/path/to/file.txt" | azcopy cp "https://[account].blob.core.windows.net/[container]/[path/to/blob]" --from-to PipeBlob

Upload an entire directory by using a SAS token:

- azcopy cp "/path/to/dir" "https://[account].blob.core.windows.net/[container]/[path/to/directory]?[SAS]" --recursive=true

or

- azcopy cp "/path/to/dir" "https://[account].blob.core.windows.net/[container]/[path/to/directory]?[SAS]" --recursive=true --put-md5

Upload a set of files by using a SAS token and wildcard (*) characters:

- azcopy cp "/path/*foo/*bar/*.pdf" "https://[account].blob.core.windows.net/[container]/[path/to/directory]?[SAS]"

Upload files and directories by using a SAS token and wildcard (*) characters:

- azcopy cp "/path/*foo/*bar*" "https://[account].blob.core.windows.net/[container]/[path/to/directory]?[SAS]" --recursive=true

Upload files and directories to Azure Storage account and set the query-string encoded tags on the blob.

- To set tags {key = "bla bla", val = "foo"} and {key = "bla bla 2", val = "bar"}, use the following syntax :

- azcopy cp "/path/*foo/*bar*" "https://[account].blob.core.windows.net/[container]/[path/to/directory]?[SAS]" --blob-tags="bla%20bla=foo&bla%20bla%202=bar"

- Keys and values are URL encoded and the key-value pairs are separated by an ampersand('&')

- https://docs.microsoft.com/en-us/azure/storage/blobs/storage-blob-index-how-to?tabs=azure-portal

- While setting tags on the blobs, there are additional permissions('t' for tags) in SAS without which the service will give authorization error back.

Download a single file by using OAuth authentication. If you have not yet logged into AzCopy, please run the azcopy login command before you run the following command.

- azcopy cp "https://[account].blob.core.windows.net/[container]/[path/to/blob]" "/path/to/file.txt"

Download a single file by using a SAS token:

- azcopy cp "https://[account].blob.core.windows.net/[container]/[path/to/blob]?[SAS]" "/path/to/file.txt"

Download a single file by using a SAS token and then piping the output to a file (block blobs only):

- azcopy cp "https://[account].blob.core.windows.net/[container]/[path/to/blob]?[SAS]" --from-to BlobPipe > "/path/to/file.txt"

Download a single file by using OAuth and then piping the output to a file (block blobs only):

- azcopy cp "https://[account].blob.core.windows.net/[container]/[path/to/blob]" --from-to BlobPipe > "/path/to/file.txt"

Download an entire directory by using a SAS token:

- azcopy cp "https://[account].blob.core.windows.net/[container]/[path/to/directory]?[SAS]" "/path/to/dir" --recursive=true

A note about using a wildcard character (*) in URLs:

There's only two supported ways to use a wildcard character in a URL.

- You can use one just after the final forward slash (/) of a URL. This copies all of the files in a directory directly to the destination without placing them into a subdirectory.

- You can also use one in the name of a container as long as the URL refers only to a container and not to a blob. You can use this approach to obtain files from a subset of containers.

Download the contents of a directory without copying the containing directory itself.

- azcopy cp "https://[srcaccount].blob.core.windows.net/[container]/[path/to/folder]/*?[SAS]" "/path/to/dir"

Download an entire storage account.

- azcopy cp "https://[srcaccount].blob.core.windows.net/" "/path/to/dir" --recursive

Download a subset of containers within a storage account by using a wildcard symbol (*) in the container name.

- azcopy cp "https://[srcaccount].blob.core.windows.net/[container*name]" "/path/to/dir" --recursive

Download all the versions of a blob from Azure Storage to local directory. Ensure that source is a valid blob, destination is a local folder and versionidsFile which takes in a path to the file where each version is written on a separate line. All the specified versions will get downloaded in the destination folder specified.

- azcopy cp "https://[srcaccount].blob.core.windows.net/[containername]/[blobname]" "/path/to/dir" --list-of-versions="/another/path/to/dir/[versionidsFile]"

Copy a single blob to another blob by using a SAS token.

- azcopy cp "https://[srcaccount].blob.core.windows.net/[container]/[path/to/blob]?[SAS]" "https://[destaccount].blob.core.windows.net/[container]/[path/to/blob]?[SAS]"

Copy a single blob to another blob by using a SAS token and an OAuth token. You have to use a SAS token at the end of the source account URL if you do not have the right permissions to read it with the identity used for login.

- azcopy cp "https://[srcaccount].blob.core.windows.net/[container]/[path/to/blob]?[SAS]" "https://[destaccount].blob.core.windows.net/[container]/[path/to/blob]"

Copy one blob virtual directory to another by using a SAS token:

- azcopy cp "https://[srcaccount].blob.core.windows.net/[container]/[path/to/directory]?[SAS]" "https://[destaccount].blob.core.windows.net/[container]/[path/to/directory]?[SAS]" --recursive=true

Copy all blob containers, directories, and blobs from storage account to another by using a SAS token:

- azcopy cp "https://[srcaccount].blob.core.windows.net?[SAS]" "https://[destaccount].blob.core.windows.net?[SAS]" --recursive=true

Copy a single object to Blob Storage from Amazon Web Services (AWS) S3 by using an access key and a SAS token. First, set the environment variable AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY for AWS S3 source.

- azcopy cp "https://s3.amazonaws.com/[bucket]/[object]" "https://[destaccount].blob.core.windows.net/[container]/[path/to/blob]?[SAS]"

Copy an entire directory to Blob Storage from AWS S3 by using an access key and a SAS token. First, set the environment variable AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY for AWS S3 source.

- azcopy cp "https://s3.amazonaws.com/[bucket]/[folder]" "https://[destaccount].blob.core.windows.net/[container]/[path/to/directory]?[SAS]" --recursive=true

Please refer to https://docs.aws.amazon.com/AmazonS3/latest/user-guide/using-folders.html to better understand the [folder] placeholder.

Copy all buckets to Blob Storage from Amazon Web Services (AWS) by using an access key and a SAS token. First, set the environment variable AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY for AWS S3 source.

- azcopy cp "https://s3.amazonaws.com/" "https://[destaccount].blob.core.windows.net?[SAS]" --recursive=true

Copy all buckets to Blob Storage from an Amazon Web Services (AWS) region by using an access key and a SAS token. First, set the environment variable AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY for AWS S3 source.

- azcopy cp "https://s3-[region].amazonaws.com/" "https://[destaccount].blob.core.windows.net?[SAS]" --recursive=true

Copy a subset of buckets by using a wildcard symbol (*) in the bucket name. Like the previous examples, you'll need an access key and a SAS token. Make sure to set the environment variable AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY for AWS S3 source.

- azcopy cp "https://s3.amazonaws.com/[bucket*name]/" "https://[destaccount].blob.core.windows.net?[SAS]" --recursive=true

Copy blobs from one blob storage to another and preserve the tags from source. To preserve tags, use the following syntax :

- azcopy cp "https://[account].blob.core.windows.net/[source_container]/[path/to/directory]?[SAS]" "https://[account].blob.core.windows.net/[destination_container]/[path/to/directory]?[SAS]" --s2s-preserve-blob-tags=true

Transfer files and directories to Azure Storage account and set the given query-string encoded tags on the blob.

- To set tags {key = "bla bla", val = "foo"} and {key = "bla bla 2", val = "bar"}, use the following syntax :

- azcopy cp "https://[account].blob.core.windows.net/[source_container]/[path/to/directory]?[SAS]" "https://[account].blob.core.windows.net/[destination_container]/[path/to/directory]?[SAS]" --blob-tags="bla%20bla=foo&bla%20bla%202=bar"

- Keys and values are URL encoded and the key-value pairs are separated by an ampersand('&')

- https://docs.microsoft.com/en-us/azure/storage/blobs/storage-blob-index-how-to?tabs=azure-portal

- While setting tags on the blobs, there are additional permissions('t' for tags) in SAS without which the service will give authorization error back.

Copy a single object to Blob Storage from Google Cloud Storage (GCS) by using a service account key and a SAS token. First, set the environment variable GOOGLE_APPLICATION_CREDENTIALS for GCS source.

- azcopy cp "https://storage.cloud.google.com/[bucket]/[object]" "https://[destaccount].blob.core.windows.net/[container]/[path/to/blob]?[SAS]"

Copy an entire directory to Blob Storage from Google Cloud Storage (GCS) by using a service account key and a SAS token. First, set the environment variable GOOGLE_APPLICATION_CREDENTIALS for GCS source.

- azcopy cp "https://storage.cloud.google.com/[bucket]/[folder]" "https://[destaccount].blob.core.windows.net/[container]/[path/to/directory]?[SAS]" --recursive=true

Copy an entire bucket to Blob Storage from Google Cloud Storage (GCS) by using a service account key and a SAS token. First, set the environment variable GOOGLE_APPLICATION_CREDENTIALS for GCS source.

- azcopy cp "https://storage.cloud.google.com/[bucket]" "https://[destaccount].blob.core.windows.net/?[SAS]" --recursive=true

Copy all buckets to Blob Storage from Google Cloud Storage (GCS) by using a service account key and a SAS token. First, set the environment variables GOOGLE_APPLICATION_CREDENTIALS and GOOGLE_CLOUD_PROJECT= for GCS source

- azcopy cp "https://storage.cloud.google.com/" "https://[destaccount].blob.core.windows.net/?[SAS]" --recursive=true

Copy a subset of buckets by using a wildcard symbol (*) in the bucket name from Google Cloud Storage (GCS) by using a service account key and a SAS token for destination. First, set the environment variables GOOGLE_APPLICATION_CREDENTIALS and GOOGLE_CLOUD_PROJECT= for GCS source

- azcopy cp "https://storage.cloud.google.com/[bucket*name]/" "https://[destaccount].blob.core.windows.net/?[SAS]" --recursive=true

Flags:

--as-subdir True by default. Places folder sources as subdirectories under the destination. (default true)

--backup Activates Windows' SeBackupPrivilege for uploads, or SeRestorePrivilege for downloads, to allow AzCopy to see read all files, regardless of their file system permissions, and to restore all permissions. Requires that the account running AzCopy already has these permissions (e.g. has Administrator rights or is a member of the 'Backup Operators' group). All this flag does is activate privileges that the account already has

--blob-tags string Set tags on blobs to categorize data in your storage account

--blob-type string Defines the type of blob at the destination. This is used for uploading blobs and when copying between accounts (default 'Detect'). Valid values include 'Detect', 'BlockBlob', 'PageBlob', and 'AppendBlob'. When copying between accounts, a value of 'Detect' causes AzCopy to use the type of source blob to determine the type of the destination blob. When uploading a file, 'Detect' determines if the file is a VHD or a VHDX file based on the file extension. If the file is either a VHD or VHDX file, AzCopy treats the file as a page blob. (default "Detect")

--block-blob-tier string upload block blob to Azure Storage using this blob tier. (default "None")

--block-size-mb float Use this block size (specified in MiB) when uploading to Azure Storage, and downloading from Azure Storage. The default value is automatically calculated based on file size. Decimal fractions are allowed (For example: 0.25).

--cache-control string Set the cache-control header. Returned on download.

--check-length Check the length of a file on the destination after the transfer. If there is a mismatch between source and destination, the transfer is marked as failed. (default true)

--check-md5 string Specifies how strictly MD5 hashes should be validated when downloading. Only available when downloading. Available options: NoCheck, LogOnly, FailIfDifferent, FailIfDifferentOrMissing. (default 'FailIfDifferent') (default "FailIfDifferent")

--content-disposition string Set the content-disposition header. Returned on download.

--content-encoding string Set the content-encoding header. Returned on download.

--content-language string Set the content-language header. Returned on download.

--content-type string Specifies the content type of the file. Implies no-guess-mime-type. Returned on download.

--cpk-by-name string Client provided key by name let clients making requests against Azure Blob storage an option to provide an encryption key on a per-request basis. Provided key name will be fetched from Azure Key Vault and will be used to encrypt the data

--cpk-by-value Client provided key by name let clients making requests against Azure Blob storage an option to provide an encryption key on a per-request basis. Provided key and its hash will be fetched from environment variables

--decompress Automatically decompress files when downloading, if their content-encoding indicates that they are compressed. The supported content-encoding values are 'gzip' and 'deflate'. File extensions of '.gz'/'.gzip' or '.zz' aren't necessary, but will be removed if present.

--disable-auto-decoding False by default to enable automatic decoding of illegal chars on Windows. Can be set to true to disable automatic decoding.

--dry-run Prints the file paths that would be copied by this command. This flag does not copy the actual files.

--exclude-attributes string (Windows only) Exclude files whose attributes match the attribute list. For example: A;S;R

--exclude-blob-type string Optionally specifies the type of blob (BlockBlob/ PageBlob/ AppendBlob) to exclude when copying blobs from the container or the account. Use of this flag is not applicable for copying data from non azure-service to service. More than one blob should be separated by ';'.

--exclude-path string Exclude these paths when copying. This option does not support wildcard characters (*). Checks relative path prefix(For example: myFolder;myFolder/subDirName/file.pdf). When used in combination with account traversal, paths do not include the container name.

--exclude-pattern string Exclude these files when copying. This option supports wildcard characters (*)

--exclude-regex string Exclude all the relative path of the files that align with regular expressions. Separate regular expressions with ';'.

--follow-symlinks Follow symbolic links when uploading from local file system.

--force-if-read-only When overwriting an existing file on Windows or Azure Files, force the overwrite to work even if the existing file has its read-only attribute set

--from-to string Optionally specifies the source destination combination. For Example: LocalBlob, BlobLocal, LocalBlobFS. Piping: BlobPipe, PipeBlob

-h, --help help for copy

--include-after string Include only those files modified on or after the given date/time. The value should be in ISO8601 format. If no timezone is specified, the value is assumed to be in the local timezone of the machine running AzCopy. E.g. '2020-08-19T15:04:00Z' for a UTC time, or '2020-08-19' for midnight (00:00) in the local timezone. As of AzCopy 10.5, this flag applies only to files, not folders, so folder properties won't be copied when using this flag with --preserve-smb-info or --preserve-smb-permissions.

--include-attributes string (Windows only) Include files whose attributes match the attribute list. For example: A;S;R

--include-before string Include only those files modified before or on the given date/time. The value should be in ISO8601 format. If no timezone is specified, the value is assumed to be in the local timezone of the machine running AzCopy. E.g. '2020-08-19T15:04:00Z' for a UTC time, or '2020-08-19' for midnight (00:00) in the local timezone. As of AzCopy 10.7, this flag applies only to files, not folders, so folder properties won't be copied when using this flag with --preserve-smb-info or --preserve-smb-permissions.

--include-directory-stub False by default to ignore directory stubs. Directory stubs are blobs with metadata 'hdi_isfolder:true'. Setting value to true will preserve directory stubs during transfers.

--include-path string Include only these paths when copying. This option does not support wildcard characters (*). Checks relative path prefix (For example: myFolder;myFolder/subDirName/file.pdf).

--include-pattern string Include only these files when copying. This option supports wildcard characters (*). Separate files by using a ';'.

--include-regex string Include only the relative path of the files that align with regular expressions. Separate regular expressions with ';'.

--list-of-versions string Specifies a file where each version id is listed on a separate line. Ensure that the source must point to a single blob and all the version ids specified in the file using this flag must belong to the source blob only. AzCopy will download the specified versions in the destination folder provided.

--metadata string Upload to Azure Storage with these key-value pairs as metadata.

--no-guess-mime-type Prevents AzCopy from detecting the content-type based on the extension or content of the file.

--overwrite string Overwrite the conflicting files and blobs at the destination if this flag is set to true. (default 'true') Possible values include 'true', 'false', 'prompt', and 'ifSourceNewer'. For destinations that support folders, conflicting folder-level properties will be overwritten this flag is 'true' or if a positive response is provided to the prompt. (default "true")

--page-blob-tier string Upload page blob to Azure Storage using this blob tier. (default 'None'). (default "None")

--preserve-last-modified-time Only available when destination is file system.

--preserve-owner Only has an effect in downloads, and only when --preserve-smb-permissions is used. If true (the default), the file Owner and Group are preserved in downloads. If set to false, --preserve-smb-permissions will still preserve ACLs but Owner and Group will be based on the user running AzCopy (default true)

--preserve-permissions False by default. Preserves ACLs between aware resources (Windows and Azure Files, or ADLS Gen 2 to ADLS Gen 2). For Hierarchical Namespace accounts, you will need a container SAS or OAuth token with Modify Ownership and Modify Permissions permissions. For downloads, you will also need the --backup flag to restore permissions where the new Owner will not be the user running AzCopy. This flag applies to both files and folders, unless a file-only filter is specified (e.g. include-pattern).

--preserve-posix-properties 'Preserves' property info gleaned from stat or statx into object metadata.

--preserve-smb-info For SMB-aware locations, flag will be set to true by default. Preserves SMB property info (last write time, creation time, attribute bits) between SMB-aware resources (Windows and Azure Files). Only the attribute bits supported by Azure Files will be transferred; any others will be ignored. This flag applies to both files and folders, unless a file-only filter is specified (e.g. include-pattern). The info transferred for folders is the same as that for files, except for Last Write Time which is never preserved for folders. (default true)

--put-md5 Create an MD5 hash of each file, and save the hash as the Content-MD5 property of the destination blob or file. (By default the hash is NOT created.) Only available when uploading.

--recursive Look into sub-directories recursively when uploading from local file system.

--s2s-detect-source-changed Detect if the source file/blob changes while it is being read. (This parameter only applies to service to service copies, because the corresponding check is permanently enabled for uploads and downloads.)

--s2s-handle-invalid-metadata string Specifies how invalid metadata keys are handled. Available options: ExcludeIfInvalid, FailIfInvalid, RenameIfInvalid. (default 'ExcludeIfInvalid'). (default "ExcludeIfInvalid")

--s2s-preserve-access-tier Preserve access tier during service to service copy. Please refer to [Azure Blob storage: hot, cool, and archive access tiers](https://docs.microsoft.com/azure/storage/blobs/storage-blob-storage-tiers) to ensure destination storage account supports setting access tier. In the cases that setting access tier is not supported, please use s2sPreserveAccessTier=false to bypass copying access tier. (default true). (default true)

--s2s-preserve-blob-tags Preserve index tags during service to service transfer from one blob storage to another

--s2s-preserve-properties Preserve full properties during service to service copy. For AWS S3 and Azure File non-single file source, the list operation doesn't return full properties of objects and files. To preserve full properties, AzCopy needs to send one additional request per object or file. (default true)

Flags Applying to All Commands:

--cap-mbps float Caps the transfer rate, in megabits per second. Moment-by-moment throughput might vary slightly from the cap. If this option is set to zero, or it is omitted, the throughput isn't capped.

--log-level string Define the log verbosity for the log file, available levels: INFO(all requests/responses), WARNING(slow responses), ERROR(only failed requests), and NONE(no output logs). (default 'INFO'). (default "INFO")

--output-level string Define the output verbosity. Available levels: essential, quiet. (default "default")

--output-type string Format of the command's output. The choices include: text, json. The default value is 'text'. (default "text")

--trusted-microsoft-suffixes string Specifies additional domain suffixes where Azure Active Directory login tokens may be sent. The default is '*.core.windows.net;*.core.chinacloudapi.cn;*.core.cloudapi.de;*.core.usgovcloudapi.net;*.storage.azure.net'. Any listed here are added to the default. For security, you should only put Microsoft Azure domains here. Separate multiple entries with semi-colons.

wrong number of arguments, please refer to the help page on usage of this command

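That is quite a lot.

Next I will put a local file on Windows Server into the Azurite Blob container (testcontainer), and then fetch it back.

Since authentication uses a SAS, the relevant example in the help above is "Upload a single file by using a SAS token:".

I will upload a file named aaa.txt as a Blob named aaa.txt.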

D:\work>azcopy cp "aaa.txt" "http://devstoreaccount1.UbuntuServer2204:10000/testcontainer/aaa.txt?sv=2021-08-06&ss=btqf&srt=sco&st=2022-11-01T10%3A18%3A36Z&se=2052-11-02T10%3A18%3A00Z&sp=rwdxftlacup&sig=xoNeblE7kxo%2FXxo5wHvyYrUGNBzpGjkD4DJhiVc64g0%3D"

INFO: The parameters you supplied were Source: 'aaa.txt' of type Local, and Destination: 'http://devstoreaccount1.UbuntuServer2204:10000/testcontainer/aaa.txt?se=2052-11-02T10%3A18%3A00Z&sig=-REDACTED-&sp=rwdxftlacup&srt=sco&ss=btqf&st=2022-11-01T10%3A18%3A36Z&sv=2021-08-06' of type Local

INFO: Based on the parameters supplied, a valid source-destination combination could not automatically be found. Please check the parameters you supplied. If they are correct, please specify an exact source and destination type using the --from-to switch. Valid values are two-word phases of the form BlobLocal, LocalBlob etc. Use the word 'Blob' for Blob Storage, 'Local' for the local file system, 'File' for Azure Files, and 'BlobFS' for ADLS Gen2. If you need a combination that is not supported yet, please log an issue on the AzCopy GitHub issues list.

failed to parse user input due to error: the inferred source/destination combination could not be identified, or is currently not supported

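For some reason it fails.

Apparently AzCopy cannot tell from an Azurite URL that the destination is a Blob; the first INFO line reports the destination as type Local.

Adding the --from-to option listed under Flags in the help above makes it work.

--from-to LocalBlob appears to mean local → Blob.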

D:\work>azcopy cp "aaa.txt" "http://devstoreaccount1.UbuntuServer2204:10000/testcontainer/aaa.txt?sv=2021-08-06&ss=btqf&srt=sco&st=2022-11-01T10%3A18%3A36Z&se=2052-11-02T10%3A18%3A00Z&sp=rwdxftlacup&sig=xoNeblE7kxo%2FXxo5wHvyYrUGNBzpGjkD4DJhiVc64g0%3D" --from-to LocalBlob

INFO: Scanning...

INFO: NOTE: HTTP is in use for one or more location(s). The use of HTTP is not recommended due to security concerns.

INFO: Any empty folders will not be processed, because source and/or destination doesn't have full folder support

Job f8e347b0-c6a7-2244-5c0c-2fe4c1775213 has started

Log file is located at: C:\Users\Administrator\.azcopy\f8e347b0-c6a7-2244-5c0c-2fe4c1775213.log

100.0 %, 1 Done, 0 Failed, 0 Pending, 0 Skipped, 1 Total, 2-sec Throughput (Mb/s): 0

Job f8e347b0-c6a7-2244-5c0c-2fe4c1775213 summary

Elapsed Time (Minutes): 0.0336

Number of File Transfers: 1

Number of Folder Property Transfers: 0

Total Number of Transfers: 1

Number of Transfers Completed: 1

Number of Transfers Failed: 0

Number of Transfers Skipped: 0

TotalBytesTransferred: 3

Final Job Status: Completed

It worked.

Now delete aaa.txt and bring it back from the Blob to the local disk.

This time the option is changed to --from-to BlobLocal.

D:\work>del aaa.txt

D:\work>azcopy cp "http://devstoreaccount1.UbuntuServer2204:10000/testcontainer/aaa.txt?sv=2021-08-06&ss=btqf&srt=sco&st=2022-11-01T10%3A18%3A36Z&se=2052-11-02T10%3A18%3A00Z&sp=rwdxftlacup&sig=xoNeblE7kxo%2FXxo5wHvyYrUGNBzpGjkD4DJhiVc64g0%3D" "aaa.txt" --from-to BlobLocal

INFO: Scanning...

INFO: NOTE: HTTP is in use for one or more location(s). The use of HTTP is not recommended due to security concerns.

INFO: Any empty folders will not be processed, because source and/or destination doesn't have full folder support

Job 2bf8adb6-18c6-4c42-620e-e11ad6b8a2ab has started

Log file is located at: C:\Users\Administrator\.azcopy\2bf8adb6-18c6-4c42-620e-e11ad6b8a2ab.log

100.0 %, 1 Done, 0 Failed, 0 Pending, 0 Skipped, 1 Total, 2-sec Throughput (Mb/s): 0

Job 2bf8adb6-18c6-4c42-620e-e11ad6b8a2ab summary

Elapsed Time (Minutes): 0.0334

Number of File Transfers: 1

Number of Folder Property Transfers: 0

Total Number of Transfers: 1

Number of Transfers Completed: 1

Number of Transfers Failed: 0

Number of Transfers Skipped: 0

TotalBytesTransferred: 3

Final Job Status: Completed

D:\work>dir aaa.txt

ドライブ D のボリューム ラベルは ボリューム です

ボリューム シリアル番号は 10FB-CD4D です

D:\work のディレクトリ

2022/11/01 20:31 3 aaa.txt

1 個のファイル 3 バイト

0 個のディレクトリ 50,612,379,648 バイトの空き領域

That worked too.
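The two transfers above differ only in the order of source and destination and in the --from-to value. As a rough sketch, assuming the commands were driven from Python instead of the Command Prompt, the argument lists could be built like this. `azcopy_copy_args` is a hypothetical helper, and `[SAS]` stands in for the real query string.

```python
def azcopy_copy_args(source: str, destination: str, from_to: str) -> list[str]:
    """Build the argument list for 'azcopy cp' with an explicit --from-to."""
    return ["azcopy", "cp", source, destination, "--from-to", from_to]


# Blob URL on the Azurite server used in this article; [SAS] is a placeholder.
BLOB_URL = "http://devstoreaccount1.UbuntuServer2204:10000/testcontainer/aaa.txt?[SAS]"

upload_args = azcopy_copy_args("aaa.txt", BLOB_URL, "LocalBlob")    # local -> Blob
download_args = azcopy_copy_args(BLOB_URL, "aaa.txt", "BlobLocal")  # Blob -> local

print(upload_args)
print(download_args)
```

Passing a list like this to `subprocess.run` avoids shell quoting problems with the `&` and `%` characters in the SAS, which is one reason to prefer it over pasting the URL into a command string.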

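The Blob that was created (aaa.txt) can also be seen in Storage Explorer.

While using Storage Explorer I noticed that, after performing an operation, it offers a way to copy the command used for that operation.

Judging by the syntax, it is PowerShell.

This is the one from when I deleted aaa.txt.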

$env:AZCOPY_CONCURRENCY_VALUE = "AUTO";

$env:AZCOPY_CRED_TYPE = "Anonymous";

./azcopy.exe remove "http://devstoreaccount1.ubuntuserver2204:10000/testcontainer/aaa.txt?sv=2018-03-28&se=2022-12-01T11%3A50%3A03Z&sr=c&sp=rdl&sig=0W%2Br2RwfPKUGAfFmyiyd35HFRuXc0CdPK%2B8L%2F7iZFOw%3D" --from-to=BlobTrash --recursive --log-level=INFO;

$env:AZCOPY_CONCURRENCY_VALUE = "";

$env:AZCOPY_CRED_TYPE = "";
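The PowerShell above sets two environment variables, runs azcopy, and then clears them again. If azcopy were launched from Python, a similar effect could be had by handing the variables to the child process only, leaving the caller's environment untouched. This is just a sketch under that assumption; `azcopy_env` is a made-up helper name, and the variable names and values are the ones Storage Explorer printed.

```python
import os


def azcopy_env(concurrency: str = "AUTO", cred_type: str = "Anonymous") -> dict[str, str]:
    """Return a copy of the current environment with the AzCopy variables added."""
    env = os.environ.copy()  # copy, so the calling process is not modified
    env["AZCOPY_CONCURRENCY_VALUE"] = concurrency
    env["AZCOPY_CRED_TYPE"] = cred_type
    return env


child_env = azcopy_env()
print(child_env["AZCOPY_CONCURRENCY_VALUE"], child_env["AZCOPY_CRED_TYPE"])
```

The resulting dict would be passed as the `env` argument of `subprocess.run`, so no cleanup step is needed afterwards.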

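It took a surprising amount of effort to run a single command, but I got there in the end.

Having gone through the whole thing, I admit I ended up thinking, "Is this really any different from the az command?"

Well, unlike az, which is really a set of Python programs, this is a single exe file.

No installation is required, either.

For a server that truly only needs to use Blobs, I suppose this is the one I would recommend.

Just between us, I was more impressed by Storage Explorer than by AzCopy.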

Whether you use az or azcopy, nobody wants to type out long URLs and SAS tokens like these every time in a script, so it is worth using variables to keep things tidy.
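One way to keep the long URL out of sight is to hold the endpoint, container, and SAS in variables and assemble the Blob URL on demand. The Python sketch below illustrates the idea with the values from this article; `blob_url` is a hypothetical helper, not something azcopy provides.

```python
from urllib.parse import quote

# Values from this article's Azurite setup.
ENDPOINT = "http://devstoreaccount1.UbuntuServer2204:10000"
CONTAINER = "testcontainer"
SAS = (
    "?sv=2021-08-06&ss=btqf&srt=sco"
    "&st=2022-11-01T10%3A18%3A36Z&se=2052-11-02T10%3A18%3A00Z"
    "&sp=rwdxftlacup&sig=xoNeblE7kxo%2FXxo5wHvyYrUGNBzpGjkD4DJhiVc64g0%3D"
)


def blob_url(endpoint: str, container: str, blob_name: str, sas: str) -> str:
    """Join endpoint, container, blob name, and SAS into one URL."""
    path = f"{container}/{quote(blob_name)}"  # percent-encode spaces etc., keep '/'
    return f"{endpoint.rstrip('/')}/{path}?{sas.lstrip('?')}"


url = blob_url(ENDPOINT, CONTAINER, "aaa.txt", SAS)
print(url)
```

The SAS is accepted with or without its leading `?`, since Storage Explorer's query string includes it and it is easy to end up with two.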

I did not get as far as checking the exit codes of azcopy.exe this time, but I expect failures can be detected from the command's return value.
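As a sketch of what that check might look like, the Python below runs a command and treats a nonzero exit code as failure. It assumes azcopy follows the usual convention of returning nonzero on error, which this article has not verified, so the demonstration uses the Python interpreter itself as a stand-in command. `run_and_check` is a hypothetical helper.

```python
import subprocess
import sys


def run_and_check(args: list[str]) -> bool:
    """Run a command and report whether it exited with code 0."""
    result = subprocess.run(args, capture_output=True, text=True)
    if result.returncode != 0:
        # Assumption: nonzero means failure, as with most command-line tools.
        print(f"command failed with exit code {result.returncode}", file=sys.stderr)
        return False
    return True


# Stand-in commands; in a real script the list would start with "azcopy".
ok = run_and_check([sys.executable, "-c", "import sys; sys.exit(0)"])
failed = run_and_check([sys.executable, "-c", "import sys; sys.exit(1)"])
print(ok, failed)
```

In a batch script the equivalent check would be on ERRORLEVEL after the azcopy line.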

azcopy also has a sync subcommand, which looks like a good fit for backups.